Context

Deployed AI models on STMicroelectronics’ STM32N6 boards, which feature a dedicated Neural Processing Unit (NPU) for efficient edge inference. The project explores how well this new architecture runs real-time AI applications on resource-constrained devices.

Technologies Used

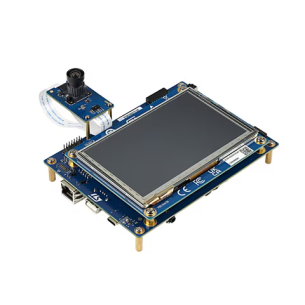

- Hardware: STM32N6 (ST NPU boards)

- AI Framework: TensorFlow Lite for Microcontrollers

- Programming: C/C++, Python (for model training)

- Tools: STM32CubeIDE, X-CUBE-AI

Implementation

The project involved:

- Model selection and optimization for edge deployment

- Quantization and conversion to TensorFlow Lite format

- Integration with STM32 NPU using ST’s AI tools

- Real-time inference testing and benchmarking
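The quantization step maps floating-point values to 8-bit integers. As a minimal sketch of the affine scheme that int8 TensorFlow Lite models rely on (real value ≈ scale × (q − zero_point)), the pure-Python example below derives a scale and zero point from a value range and round-trips a few numbers. The function names and the sample range are illustrative assumptions, not the project's actual conversion code, which would normally go through the TensorFlow Lite converter.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit integer range


def quant_params(min_val: float, max_val: float) -> Tuple[float, int]:
    """Derive (scale, zero_point) for an observed value range."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    min_val = min(min_val, 0.0)
    max_val = max(max_val, 0.0)
    scale = (max_val - min_val) / (QMAX - QMIN)
    if scale == 0.0:
        # Degenerate range (all zeros): any positive scale works.
        return 1.0, 0
    zero_point = int(round(QMIN - min_val / scale))
    zero_point = max(QMIN, min(QMAX, zero_point))
    return scale, zero_point


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    """Map floats to int8, clamping anything outside the representable range."""
    return [
        max(QMIN, min(QMAX, int(round(v / scale)) + zero_point))
        for v in values
    ]


def dequantize(q_values: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats from int8 values."""
    return [scale * (q - zero_point) for q in q_values]
```

Within the calibrated range, the round-trip error of each value is bounded by half of `scale`, which is why a tight, representative calibration range matters for accuracy after quantization.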

Architecture Overview

Results

- Successfully deployed AI models on the NPU

- Achieved inference latency under 100 ms, suitable for real-time use on edge devices

- Reduced the memory footprint to fit embedded constraints
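To make the memory-footprint point concrete, here is a rough sketch of how weight storage scales with the element type. The parameter count and memory budget in the usage lines are hypothetical values chosen for illustration, not measurements from this project, and the estimate ignores activation buffers and quantization metadata.

```python
# Bytes needed to store one weight for each element type.
BYTES_PER_ELEMENT = {"float32": 4, "float16": 2, "int8": 1}


def weight_bytes(param_count: int, dtype: str) -> int:
    """Estimate the storage needed for the model weights alone."""
    return param_count * BYTES_PER_ELEMENT[dtype]


def fits_budget(param_count: int, dtype: str, budget_bytes: int) -> bool:
    """Check whether the weights fit in a given memory budget."""
    return weight_bytes(param_count, dtype) <= budget_bytes


# Hypothetical model with 250,000 parameters and a 512 KiB weight budget.
PARAMS = 250_000
BUDGET = 512 * 1024
float32_size = weight_bytes(PARAMS, "float32")
int8_size = weight_bytes(PARAMS, "int8")
```

Under these assumed numbers, the float32 weights exceed the budget while the int8 weights fit, which is the usual motivation for quantizing before deployment.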

Performance Benchmarks

Key Metrics:

- Inference time: < 100 ms

- Power consumption: Optimized for battery operation

- Accuracy: 95%+ on target dataset
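One way to check an inference-time target like the one above is to summarize a batch of per-inference latencies and compare a tail percentile against the budget. The sketch below assumes the latencies (in milliseconds) have already been collected, for example logged from the board over a serial link; the function names and the choice of the 95th percentile are assumptions made for illustration, not the project's benchmarking method.

```python
import statistics
from typing import Dict, List


def summarize_latencies(samples_ms: List[float]) -> Dict[str, float]:
    """Return mean, 95th percentile (nearest-rank), and max latency in ms."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_ms)
    n = len(ordered)
    # Nearest-rank percentile: rank = ceil(0.95 * n), using integer arithmetic.
    rank = (95 * n + 99) // 100
    return {
        "mean_ms": statistics.fmean(ordered),
        "p95_ms": ordered[rank - 1],
        "max_ms": ordered[-1],
    }


def meets_budget(stats: Dict[str, float], budget_ms: float = 100.0) -> bool:
    """True when the 95th-percentile latency is strictly below the budget."""
    return stats["p95_ms"] < budget_ms
```

Comparing a tail percentile rather than the mean keeps occasional slow inferences from being hidden by the average, which matters for real-time use.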

Links

- GitHub: [Coming soon]

- Documentation: [Project Wiki]